At the time of this writing, in July 2020, the COVID-19 pandemic has killed over 133,000 people in the United States. The dead are disproportionately Black and Latinx people and those who were unable, or not allowed by their employers, to work remotely. During the pandemic, we’ve seen our technological infrastructures assume ever more importance—from the communications technology that allows people with the means and privilege to telecommute, to the platforms that amplify public health information or deadly, politicized misinformation. We’ve also seen some of the infrastructure that runs the social safety net break down under an increasing load. This includes state unemployment systems that pay workers the benefits they’ve contributed to for decades through taxes. In a global pandemic, being able to work from home, to quit and live on savings, or to be laid off and draw unemployment benefits has literally become a matter of life and death.

The cracks in our technological infrastructure became painfully evident in the spring, as US corporations responded to the pandemic by laying off more and more workers. So many people had to file for unemployment at once that computerized unemployment claim systems started to malfunction. Around the country, phone lines jammed, websites crashed, and millions of people faced the possibility of not being able to pay for rent, medicine, or food.

As the catastrophe unfolded, several state governments blamed it on aged, supposedly obsolete computer systems written in COBOL, a programming language that originated in the late 1950s. At least a dozen state unemployment systems still run on this sixty-one-year-old language, including ones that help administer funds of a billion dollars or more in California, Colorado, and New Jersey. When the deluge of unemployment claims hit, the havoc it seemed to wreak on COBOL systems was so widespread that many states apparently didn’t have enough programmers to repair the damage; the governor of New Jersey even publicly pleaded for the help of volunteers who knew the language.

But then something strange happened. When scores of COBOL programmers rushed to offer their services, the state governments blaming COBOL didn’t accept the help. In fact, it turned out the states didn’t really need it to begin with. For many reasons, COBOL was an easy scapegoat in this crisis—but in reality what failed wasn’t the technology at all.

A “Dead” Language is Born

One of the most remarkable things about the unemployment claims malfunction wasn’t that things might suddenly go terribly wrong with COBOL systems, but that they never had before. Many computerized government and business processes around the world still run on and are actively written in COBOL—from the programs that reconcile almost every credit card transaction around the globe to the ones that administer a disability benefits system serving roughly ten million US veterans. The language remains so important that IBM’s latest, fastest “Z” series of mainframes have COBOL support as a key feature.

For six decades, systems written in COBOL have proven highly robust—which is exactly what they were designed to be. COBOL was conceived in 1959, when a computer scientist named Mary Hawes, who worked in the electronics division of the business equipment manufacturer Burroughs, called for a meeting of computer professionals at the University of Pennsylvania. Hawes wanted to bring industry, government, and academic experts together to design a programming language for basic business functions, especially finance and accounting, that was easily adaptable to the needs of different organizations and portable between mainframes manufactured by different computer companies.

The group that Hawes convened evolved into a body called CODASYL, the Conference on Data Systems Languages, which included computer scientists from the major computing hardware manufacturers of the time, as well as the government and the military. CODASYL set out to design a programming language that would be easier to use, easier to read, and easier to maintain than any other programming language then in existence.

The committee’s central insight was to design the language using terms from plain English, in a way that was more self-explanatory than other languages. With COBOL, which stands for “Common Business-Oriented Language,” the goal was to make the code so readable that the program itself would document how it worked, allowing programmers to understand and maintain the code more easily.

COBOL is a “problem-oriented” language, whose structure was designed around the goals of business and administrative users, instead of being maximally flexible for the kind of complex mathematical problems that scientific users would need to solve. The main architect of COBOL, Jean Sammet, then a researcher at Sylvania Electric and later a manager at IBM, wrote, “It was certainly intended (and expected) that the language could be used by novice programmers and read by management.” (Although the pioneering computer scientist Grace Hopper has often been referred to as the “mother of COBOL,” her involvement in developing the specification for the language was minimal; Sammet is the one who really deserves the title.)

Other early high-level programming languages, such as FORTRAN, a language designed for complex mathematical computation, used idiosyncratic abbreviations and mathematical symbols that could be difficult to understand if you weren’t a seasoned user of the language. For example, where a FORTRAN program required programmers to know mathematical formula notation and to write commands like:

TOTAL = REAL(NINT(EARN * TAX * 100.0))/100.0

users of COBOL could write the same command as:

MULTIPLY EARNINGS BY TAXRATE GIVING SOCIAL-SECUR ROUNDED.

As you can tell from the COBOL version, but probably not from the FORTRAN version, this line of code is a (simplified) example of how both languages could compute a Social Security contribution and round the total to the penny. Because it was designed not just to be written but also to be read, COBOL would make computerized business processes more legible, both for the original programmers and managers and for those who maintained these systems long afterwards.
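For readers who know neither language, the same calculation can be sketched in Python. This is an illustrative sketch only: the function name `social_security_deduction` and the sample figures are hypothetical and do not come from any real payroll or unemployment system. It uses Python's standard `decimal` module so that amounts in cents are represented exactly, and the `ROUND_HALF_UP` mode, which rounds halves away from zero, as Fortran's `NINT` and COBOL's default `ROUNDED` behavior do.

```python
from decimal import Decimal, ROUND_HALF_UP


def social_security_deduction(earnings: str, tax_rate: str) -> Decimal:
    """Multiply earnings by a tax rate and round the result to the penny.

    Hypothetical helper for illustration. Inputs are strings so that
    Decimal stores them exactly, avoiding binary floating-point error.
    """
    product = Decimal(earnings) * Decimal(tax_rate)
    # Quantize to two decimal places; ROUND_HALF_UP sends ties away from zero.
    return product.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(social_security_deduction("1234.56", "0.062"))
```

With these sample inputs the function returns `Decimal('76.54')`. Naming the rounding mode explicitly matters here: Python's built-in `round()` rounds halves to the nearest even digit instead, so a tie such as 10.025 would not necessarily come out the same way.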

A portable, easier-to-use programming language was a revolutionary idea for its time, and prefigured many of the programming languages that came after. Yet COBOL was almost pronounced dead even before it was born. In 1960, the year that the language’s specification was published, a member of the CODASYL committee named Howard Bromberg commissioned a little “COBOL tombstone” as a practical joke. Bromberg, a manager at the electronics company RCA who had earlier worked with Grace Hopper on her FLOW-MATIC language, was concerned that by the time everybody finally got done designing COBOL, competitors working on proprietary languages would have swept the market, locking users into programming languages that might only run well on one manufacturer’s line of machines.

But when COBOL came out in 1960, less than a year after Mary Hawes’s initial call to action, it was by no means dead on arrival. The earliest demonstrations of COBOL showed the language could be universal across hardware. “The significance of this,” Sammet wrote, with characteristic understatement, was that it meant “compatibility could really be achieved.” Suddenly, computer users had a full-featured cross-platform programming language of far greater power than anything that came before. COBOL was a runaway success. By 1970, it was the most widely used programming language in the world.

Scapegoats and Gatekeepers

Over the subsequent decades, billions and billions of lines of COBOL code were written, many of which are still running within financial institutions and government agencies today. The language has been continually improved and given new features. And yet, COBOL has been derided by many within the computer science field as a weak or simplistic language. Though couched in technical terms, these criticisms have drawn on a deeper source: the culture and gender dynamics of early computer programming.

During the 1960s, as computer programming increasingly came to be regarded as a science, more and more men flooded into what had previously been a field dominated by women. Many of these men fancied themselves to be a cut above the programmers who came before, and they often perceived COBOL as inferior and unattractive, in part because it did not require abstruse knowledge of underlying computer hardware or a computer science qualification. Arguments about which languages and programming techniques were “best” were part of the field’s growing pains as new practitioners tried to prove their worth and professionalize what had been seen until the 1960s as rote, unintellectual, feminized work. Consciously or not, the last thing many male computer scientists entering the field wanted was to make the field easier to enter or code easier to read, which might undermine their claims to professional and “scientific” expertise.

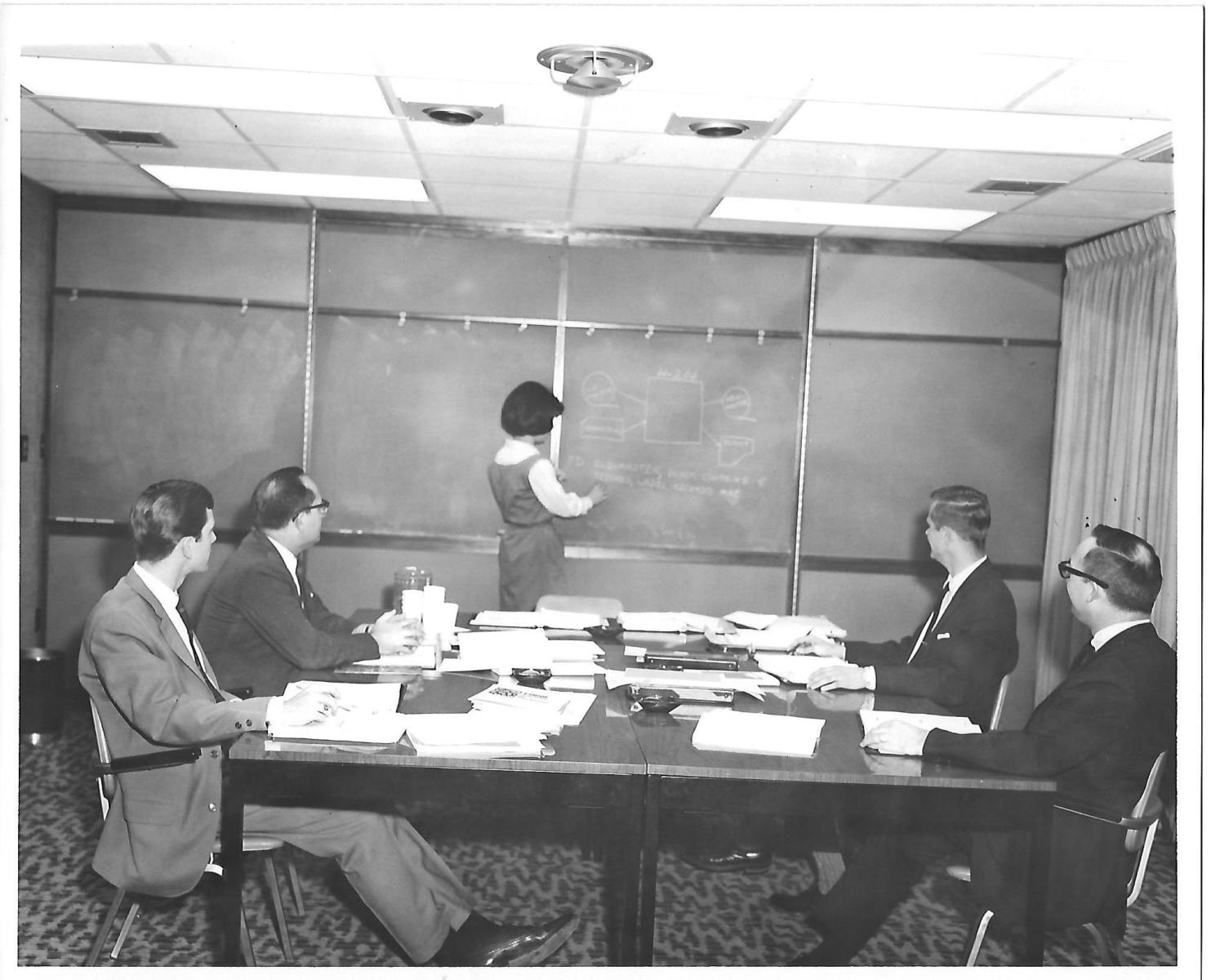

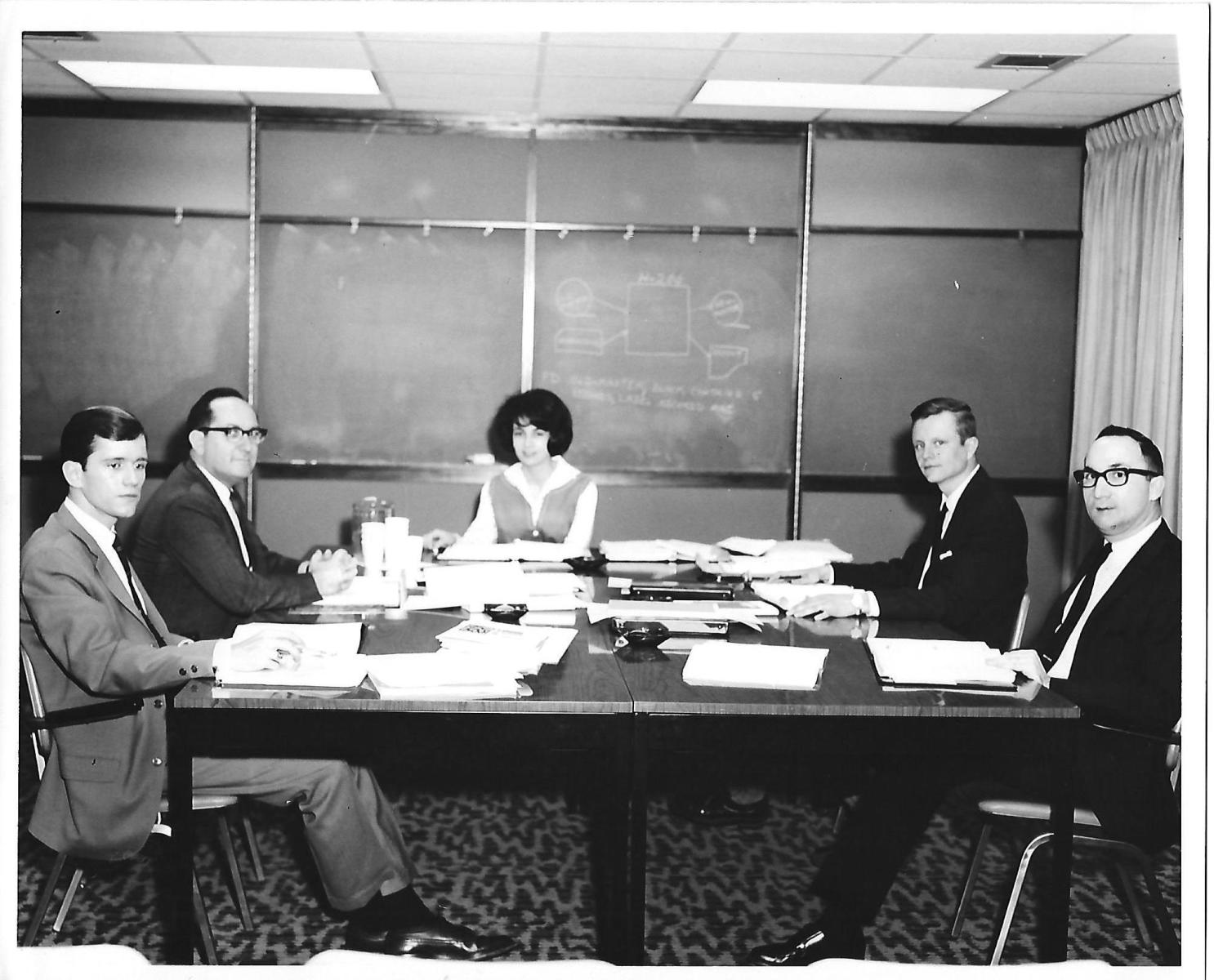

At first, however, the men needed help. Looking back, we see many examples of women teaching men how to program, before women gradually receded from positions of prominence in the field. Juliet Muro Oeffinger, one of about a dozen programmers I interviewed for this piece, began programming in assembly language in 1964 after graduating college with a BA in math. “When COBOL became the next hot thing,” she said, “I learned COBOL and taught it for Honeywell Computer Systems as a Customer Education Rep.” In the images above, Oeffinger teaches a room full of men at the Southern Indiana Gas and Electric Company how to program in the language. Within a short time, these trainees, who had no prior experience with computer work of any kind, would be programming in COBOL.

Pam Foltz, another retired programmer I spoke to, noted that good COBOL made great long-term infrastructure because it was so transparent. Almost anyone with a decent grasp of programming could come into a COBOL system built by someone else decades earlier and understand how the code worked. Foltz had a long career as a programmer for financial institutions, retraining in COBOL soon after getting her BA in American studies from the University of North Carolina at Chapel Hill in the 1960s. Perhaps this dual background is one reason why her code was so readable; as one of her supervisors admiringly told her, “You write COBOL like a novel! Anyone could follow your code.”

Ironically, this accessibility is one of the reasons that COBOL was denigrated. It is not simply that the language is old; so are many infrastructural programming languages. Take the C programming language: it was created in 1972, but as one of the current COBOL programmers I interviewed pointed out, nobody makes fun of it or calls it an “old dead language” the way people do with COBOL. Many interviewees noted that knowing COBOL is in fact a useful present-day skill that’s still taught in many community college computer science courses in the US, and at many colleges around the world.

But despite this, there’s a cottage industry devoted to making fun of COBOL precisely for its strengths. COBOL’s qualities of being relatively self-documenting, having a short onboarding period (though a long path to becoming an expert), and having been originally designed by committee for big, unglamorous, infrastructural business systems all count against it. So does the fact that it did not come out of a research-oriented context, as languages such as C, ALGOL, and FORTRAN did.

In a broader sense, hating COBOL was—and is—part of a struggle between consolidating and protecting computer programmers’ professional prestige on the one hand, and making programming less opaque and more accessible on the other. There’s an old joke among programmers: “If it was hard to write, it should be hard to read.” In other words, if your code is easy to understand, maybe you and your skills aren’t all that unique or valuable. If management thinks the tools you use and the code you write could be easily learned by anyone, you are eminently replaceable.

The fear of this existential threat to computing expertise has become so ingrained in the field that many people don’t even see the preference for complex languages for what it is: an attempt to protect one’s status by favoring tools that gate-keep rather than those that assist newcomers. As one contemporary programmer, who works mainly in C++ and Java at IBM, told me, “Every new programming language that comes out that makes things simpler in some way is usually made fun of by some contingent of existing programmers as making programming too easy, or they say it’s not a ‘real language.’” Because Java, for example, included automatic memory management, it was seen as a less robust language, and the people who programmed in it were sometimes considered inferior programmers. “It’s been going on forever,” said this programmer, who has been working in the field for close to thirty years. “It’s about gatekeeping, and keeping one’s prestige and importance in the face of technological advancements that make it easier to be replaced by new people with easier to use tools.” Gatekeeping is not only done by people and institutions; it’s written into programming languages themselves.

In a field that has elevated boy geniuses and rockstar coders, obscure hacks and complex black-boxed algorithms, it’s perhaps no wonder that a committee-designed language meant to be easier to learn and use—and which was created by a team that included multiple women in positions of authority—would be held in low esteem. But modern computing has started to become undone, and to undo other parts of our societies, through the field’s high opinion of itself, and through the way that it concentrates power into the hands of programmers who mistake social, political, and economic problems for technical ones, often with disastrous results.

The Labor of Care

A global pandemic in which more people than ever before are applying for unemployment is a situation that COBOL systems were never designed to handle, because a global catastrophe on this scale was never supposed to happen. And yet, even in the midst of this crisis, COBOL systems didn’t actually break down. Although New Jersey’s governor issued his desperate plea for COBOL programmers, later investigations revealed that it was the website through which people filed claims, written in the comparatively much newer programming language Java, that was responsible for the errors, breakdowns, and slowdowns. The backend system that processed those claims—the one written in COBOL—hadn’t been to blame at all.

So why was COBOL framed as the culprit? It’s a common fiction, and one that sells more units of consumer electronics, that computing technologies tend to become obsolete in a matter of years or even months. But this has never been true when it comes to large-scale computing infrastructure. This misapprehension, and the language’s history of being disdained by an increasingly toxic programming culture, made COBOL an easy scapegoat. But the narrative that COBOL was to blame for recent failures undoes itself: scapegoating COBOL can’t get far when the code is in fact meant to be easy to read and maintain.

That said, even the most robust systems need proper maintenance in order to fix bugs, add features, and interface with new computing technologies. Despite the essential functions they perform, many COBOL systems have not been well cared for. If they had come close to faltering in the current crisis, it wouldn’t have been because of the technology itself. Instead, it would have been due to the austerity logic to which so many state and local governments have succumbed.

In order to care for technological infrastructure, we need maintenance engineers, not just systems designers—and that means paying for people, not just for products. COBOL was never meant to cut programmers out of the equation. But as state governments have moved to slash their budgets, they’ve been less and less inclined to pay for the labor needed to maintain critical systems. Many of the people who should have been on payroll to maintain and update the COBOL unemployment systems in states such as New Jersey have instead been laid off due to state budget cuts. As a result, those systems can become fragile, and in a crisis, they’re liable to collapse due to lack of maintenance.

It was this austerity-driven lack of investment in people, rather than the handy fiction peddled by state governments that programmers with obsolete skills had retired, that removed COBOL programmers years before this recent crisis. The reality is that there are plenty of new COBOL programmers out there who could do the job. In fact, the majority of people in the COBOL programmers’ Facebook group are twenty-five to thirty-five years old, and the number of people being trained to program and maintain COBOL systems globally is only growing. Many people who work with COBOL graduated in the 1990s or 2000s and have spent most of their twenty-first-century careers maintaining and programming COBOL systems.

If the government programmers who were supposed to be around had still been on payroll to maintain unemployment systems, there’s a very good chance that the failure of unemployment insurance systems to meet the life-or-death needs of people across the country wouldn’t have happened. It’s likely those programmers would have needed to break out their Java skills to fix the issue, though: despite the age of COBOL systems, when the crisis hit, COBOL trundled along, remarkably stable.

Indeed, present-day tech could use more of the sort of resilience and accessibility that COBOL brought to computing—especially for systems that have broad impacts, will be widely used, and will be long-term infrastructure that needs to be maintained by many hands in the future. In this sense, COBOL and its scapegoating show us an important aspect of high tech that few in Silicon Valley, or in government, seem to understand. Older systems have value, and constantly building new technological systems for short-term profit at the expense of existing infrastructure is not progress. In fact, it is among the most regressive paths a society can take.

As we stand in the middle of this pandemic, it is time for us to collectively rethink and recalculate the value that many so-called tech innovations, and innovators, bring to democracy. When these contributions are designed around monetizing flaws or gaps in existing economic, social, or political systems, rather than doing the mundane, plodding work of caring for and fixing the systems we all rely on, we end up with more problems than solutions, more scapegoats instead of insights into where we truly went wrong.

There are analogies between the failure of state unemployment systems and the failure of all sorts of public infrastructure: Hurricane Sandy hit the New York City subway system so hard because it, too, had been weakened by decades of disinvestment. Hurricane Katrina destroyed Black lives and neighborhoods in New Orleans because the levee maintenance work that was the responsibility of the federal government was far past due, a result of racist resource allocation. COVID-19 continues to ravage the United States more than any other nation because the federal infrastructure needed to confront public health crises has been hollowed out for decades, and held in particular contempt by an Administration that puts profits over people, and cares little, if at all, about the predominantly Black and Latinx people in the US who are disproportionately dying.

If we want to care for people in a pandemic, we also have to be willing to pay for the labor of care. This means the nurses and doctors who treat COVID patients; the students and teachers who require smaller, online classes to return to school; and the grocery workers who risk their lives every day. It also means making long-term investments in the engineers who care for the digital infrastructures that care for us in a crisis.

When systems are built to last for decades, we often don’t see the disaster unfolding until the people who cared for those systems have been gone for quite some time. The blessing and the curse of good infrastructure is that when it works, it is invisible: which means that too often, we don’t devote much care to it until it collapses.