Though you’re currently an artist and professor, you were originally trained as a water engineer. What does that mean?

My first degree was in environmental engineering and I specialized in water, particularly stormwater. This is an area of engineering concerned with how cities, roads, and landscapes are designed to deal with runoff and water flows. It’s an area that overlaps with hydrology, which is the study of how water interacts with the environment — how we model and predict rainfall and what it’s going to do on the ground. It also overlaps with urban design, because obviously a road is not just a thing for cars to drive on, but also a mechanism to manage water flows in a storm.

Every gutter or drain or pipe is the materialization of a process of data collection, estimation, and modeling and is therefore a wager on environmental variability. How much water might fall? How often? And what is the biggest or smallest rain event the system must be able to deal with? I was involved in designing those sorts of systems.

Towards the end of my time working in that industry in Australia, I was in an area of environmental engineering called “water-sensitive urban design” where we were trying to design living systems to improve water quality. Specifically, we were working on improving the composition and quality of stormwater that flowed from housing developments to rivers or oceans downstream. Instead of designing a pollutant trap made out of concrete, we would try to imitate how a natural watershed would work and design a wetland so that stormwater would flow through it and hang out there for a few days before flowing into a creek. In this way, the water would both get filtered and support wildlife and plant growth along the way.

How did you go from doing that engineering work to making art?

There’s license in the arts to question very normative assumptions. The engineering approach of designing infrastructures as living systems does still give me hope for how we might rethink human systems more broadly. But I continue to feel quite frustrated with the way that engineering as a discipline tends to frame problems as technical challenges. You’re supposed to scope out the political and social forces that are causing an environmental problem, and just slap a technical fix on the end of it. Even the work I was doing — that really nice, innovative, environmental work — was facilitating terrible housing developments full of huge McMansions. It seemed like my job was to make these wildly unsustainable projects just a little bit less bad.

So I started to get more and more interested in different kinds of questions. Like, who and what do we value? What do we think we need in order to have a good life? These weren’t questions we asked as engineers, but they were questions I could ask as an artist.

For example, as an engineer, your goal is to minimize risk to humans living in the environment, and to do this, you have to adhere to regulations such as human health standards. But the cost may be the capacity of other, nonhuman species to live and flourish. At some point, you have to think about how you weigh that cost. We urgently need to expand the definition of human health to also include the fates of other life forms. There was very little room in the space I was working in to explore these assumptions and the cost of designing from a solely human-centric perspective.

Unlearning Engineering

Decentering the human feels like a theme throughout your work.

One of my earliest pieces was called “Coin-Operated Wetland.” It was an installation that recreated what I was doing as an engineer, but in a gallery space. I built a system where a washing machine was connected to a wetland. The whole installation was a closed system, where the water that was used to wash clothes ultimately ended up in the wetland and then circulated back to the washing machine. What if you show people that there is no downstream? What if you’re confronted with the life forms that are directly impacted by your actions?

The night before that show opened, I was incredibly stressed because working with water is so hard! With software-based work, if you get a glitch, you edit the code and even use the mistake to inform the aesthetic of the work. But mistakes in a system involving water can result in, you know, flooding.

Also, in order for a living water treatment system to work, you can’t use disinfectants like chlorine because it will kill all the bacteria and plants. So from a human health perspective, that system didn’t actually comply with health standards and I kept thinking, “Oh my God, someone’s going to put their hand in it and put their hand in their mouth, and I’m going to get sued.” That was the engineer in me, thinking about risk minimization.

In the end, the system was actually pretty successful; the plants were happy and, even though the laundry water wasn’t treated to drinking water standards, the T-shirts we put in the laundromat came out looking clean. Of course, it required more maintenance and labor than a washing machine you’d have in your house, and it was less efficient by some measures; we could only do one load per day because that’s the pace at which the plants could consume the water. But if we’re going to shift away from seeing ecosystems strictly as service providers and towards a more negotiated, reciprocal relationship with them, our systems are going to need a little more give. That project was about seeking a balance, and exploring how to build infrastructures that are not optimized for humans alone.

I mean, you’d never be allowed to build something like that as an engineer. The client would sue you.

That balancing act reminds me of something engineer and professor Deb Chachra wrote in one of her newsletters. She wrote, “Sustainability always looks like underutilization when compared to resource extraction.”

That’s beautiful. Deb also writes about infrastructure as being care at scale, which I think is a nice way to think about it. Could there be a model where infrastructures don’t just care for humans, but also care for the ecosystems where they’re acting?

I’m obsessed with water leaks for that reason. If you look at a water pipe at the point where it’s leaking, you usually have these little gardens popping up, all these little ecosystems that are taking advantage of the water supply. There’s been fascinating research published on how leaks from water distribution systems in cities actually recharge groundwater aquifers because most of these systems leak 10 to 30 percent of their water.

Of course, there’s also research going on at MIT and all these engineering schools on how to develop little autonomous robots that go into the pipes and find the leaks and plug them up. From the perspective of design and engineering, the system is not supposed to be porous; leaks are a problem, an inefficiency. But it actually takes more than just humans to make the city. What about the street trees that depend on those leaks? So then the question becomes: is there a way we can share resources with other species rather than completely monopolizing them?

The shift from looking at unintended side effects, of leaks for example, to intentionally surfacing or creating side effects reminds me of your project “Unfit Bits.” You and your collaborator Surya Mattu demonstrate all these ways to “hack” a Fitbit by making it register steps when you’re not actually taking them, such as attaching the Fitbit to a dog’s collar or the wheel of a bicycle. That project is about deliberate subversion — side effects intended by the hacker, but not by the creator of the system being hacked.

I haven’t really thought about leaks and “Unfit Bits” together, but that project is also about the manipulation of infrastructure and the deliberate glitching of a dataset for a different political end. Whether you look at leaks in a water system or leaks in an information system like the Panama Papers or the Snowden leaks, both are about a redistribution of power.

In the context of fitness trackers and employer-provided health and life insurance, the pitch is that tracker data provides an accurate picture of a person’s health. That’s a very political claim, especially in the US where access to insurance is commodified and not universal. As a result, employers are handing over employee fitness data to private insurance companies as part of workplace wellness programs, and some of them even penalize their employees for refusing to wear these trackers. Life insurance companies are using Fitbit data to help determine premiums. You’ve got this very fraught situation where a dataset is playing a critical role in how people get access to essential services.

With “Unfit Bits,” you author your own dataset and optimize it for what you want rather than being subject to what it says is the objective reality of your life. If you want to see how a system works, look at what the system deems inaccurate or inefficient. Pay attention to what’s called an error. In a water system, it’s a leak. With a Fitbit, it’s anything that doesn’t meet the narrow definition of a step. On the flip side, many gestures that aren’t steps get measured as steps. “Unfit Bits” exploits that.

With both leaks and glitches, you’re poking holes in the idea that systems are perfectly closed and objective.

Yes, and it’s also me trying to unlearn an engineering worldview. When you’re trained as an engineer, you’re taught that you’re going to make a system that solves a problem. Very rarely do you get to the point of asking: is the problem we’re solving for the same for everyone? Who gets to decide what qualifies as a problem and what are the tradeoffs in how it is defined? There are these universal ideas of what’s efficient. Well, efficient for whom?

Not looking at the world as an engineer is also about embracing inefficiencies or using them to tease out what we call success in a system.

Simulation Machines

Part of what makes “Unfit Bits” work is the absurdity of putting a Fitbit on a dog collar to convince the device that you ran five miles. Your project “Asunder” also uses absurdity, but to make a point about optimizing the environment. Tell me about that project.

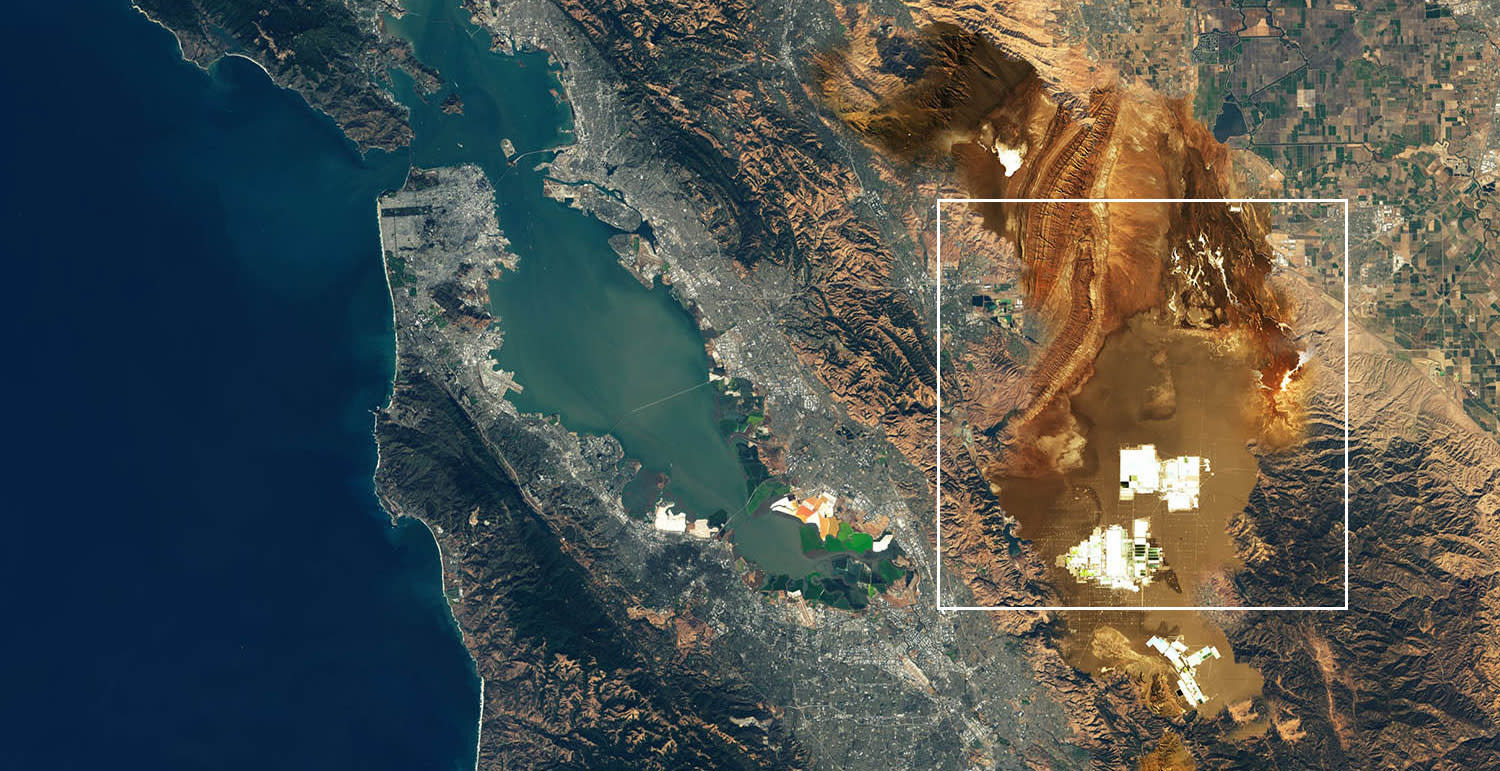

It’s basically a simulation machine. Julian Oliver, Bengt Sjölén, and I built a computing system to generate fictional geoengineering proposals. The work cycles through publicly available satellite imagery of the world — tiles taken from the Landsat 5, 7 and 8 satellites (6 got lost). From that dataset, we chose a series of sites that have gone through significant change over the last thirty to forty years and we show these places using the historic satellite tiles for that site.

From there, our computer generates preposterous scenarios for geoengineering that site, like rerouting a river or recombining the site with another site from across the world. It generates lots of scenarios using a GAN — a machine learning method that uses existing images to generate new images — to stitch the satellite tiles together. You end up with these surreal, dreamlike Landsat tiles that are made up of edited landscapes. Then the system chooses one possibility, analyzes the land use changes in it, and inputs that data into a climate model to estimate how the change would impact the environmental performance of the earth overall.

One of our sites is Silicon Valley. The system generated a geoengineering scenario where it took a lithium mining region in Chile and transplanted it into Silicon Valley. In another scenario, a region in Antarctica is recombined with an area of industrial agriculture in the US Midwest. Using the climate model, the simulation then gradually tries to estimate what that would mean, climate-wise.

The project takes the solutionism that you hear in geoengineering spaces to the most ridiculous extreme possible. These solutions are totally not viable.

I know it’s generating ridiculous scenarios, but I can also imagine the EPA or NASA wanting to do exactly this.

Yeah, and if you look at climate forecasts a hundred years out, there are a lot of extremely bizarre land use changes that are predicted unless we can drastically change our systems of production. An ice-free Arctic. Agricultural areas shifting to higher latitudes as frozen areas in Russia and Canada thaw. The complete desertification of agricultural areas that are viable today. And if that’s not bad enough, all of the southern wine-growing regions in Europe are predicted to become too dry to support wine production anymore.

This is the information that’s coming from the scientific community today. So although the system we built with “Asunder” generates scenarios that feel preposterous, we live at a moment where scientists are predicting an even more catastrophic future.

We’re all trying to assimilate that view of our future. I think anybody who’s done even a little bit of reading on the subject must feel a deep sense of dislocation. The narratives we grew up with around modernity and technology and progress are really at odds with what the science is telling us.

How did you all decide on satellite imagery and machine learning as the mediums for this piece?

They’re part of a long tradition. The history of weather prediction is entwined with the history of computation: the first electronic computer, the ENIAC, was a military technology developed in the 1940s, and then put to civilian use. One of the use cases was weather prediction; in the 1950s John von Neumann developed the first weather prediction techniques on the ENIAC. So this is a long-running historic project that has produced the knowledge of how we’ve changed the composition of the atmosphere and what that means. We wouldn’t be able to understand climate change if we didn’t have these technologies. They have given us a view of the world that would otherwise be impossible.

At the same time, these simulations of the world can make you forget that there are other ways to know. Computing is so seductive that way. It makes you forget that there’s always something the simulation can’t capture. And it turns out that all these decisions have to be made about how the simulation or the model is built, and those all impact the end result.

“Asunder” is also connected with work I’ve been doing in the past year or so around machinic perspectives of environmental systems and what looking at the environment as a computer does. How does it make us see the biosphere, and how does it produce and foreclose certain possibilities?

These questions are really important, because we have some urgent environmental challenges to deal with. And this is happening at the exact moment where we also have a surplus of computation. Everyone’s like, “What can we do with all this computation? I know! We can solve climate change and extinction and whatever!”

You’ve been making work about the environment, nonhumans, and environmental timescales for a decade. My sense is that the language of “climate crisis” and “extinction events” has recently become mainstream in a way that it wasn’t before. What has it been like to watch that happen? Has it changed how your work is received?

It’s a huge relief to see these issues become more widely discussed and to see organizing and activist work happening in the mainstream. We need more of that, and we need our collective action to be more extreme because the political class has decided to leverage denial for their economic gain. There’s no question that we need to be doing everything we can to take power away from those people. It’s horrifying that denial and doubt are being used very strategically by powerful people not only in the US, but also in Australia where I’m from.

I’d love to see much more experimentation around how to reconfigure relations and trouble the human-centeredness of technologies and infrastructures. I’m excited to see more work that takes up what it means to attempt to optimize an environment. So often, we think about data in service of prediction and control as the primary way to encounter an environment. And yet there are so many other ways to know a place.